ROS for Human-Robot Interaction

As of March 2022, this documentation page is at a very early stage!

We are actively expanding the documentation, including tutorials. For now, it remains very sparse.

ROS for Human-Robot Interaction (or ROS4HRI) is an umbrella for all the ROS packages, conventions and tools that help develop interactive robots with ROS.


ROS4HRI conventions

REP-155 defines a set of topics, naming conventions and frames that are important for HRI applications.

As of 2022-03-06, REP-155 is under public discussion on GitHub: github.com/ros-infrastructure.
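To make the naming conventions more concrete, here is a small, ROS-independent Python sketch that builds topic and tf frame names in the style of REP-155. The namespace roots (`/humans/faces`, `/humans/bodies`, `/humans/voices`, `/humans/persons`), the `tracked` and `roi` sub-topics and the `<kind>_<id>` frame pattern reflect our reading of the REP and are assumptions here; the helper function names are hypothetical. Check the REP text itself before relying on them.

```python
# Hypothetical helpers producing REP-155-style names.
# ASSUMPTION: the namespace roots and patterns below reflect our reading of
# REP-155; verify them against the REP before relying on them.

# Assumed root namespace for each kind of human "feature".
NAMESPACES = {
    "face": "/humans/faces",
    "body": "/humans/bodies",
    "voice": "/humans/voices",
    "person": "/humans/persons",
}


def tracked_topic(kind: str) -> str:
    """Topic that lists the IDs of the currently tracked features of `kind`."""
    return f"{NAMESPACES[kind]}/tracked"


def feature_topic(kind: str, feature_id: str, subtopic: str) -> str:
    """Per-feature topic, e.g. the region of interest of a single face."""
    # An ID must be a single, non-empty name segment.
    if not feature_id or "/" in feature_id:
        raise ValueError(f"invalid ID: {feature_id!r}")
    return f"{NAMESPACES[kind]}/{feature_id}/{subtopic}"


def feature_frame(kind: str, feature_id: str) -> str:
    """tf frame attached to a feature, assuming a `<kind>_<id>` pattern."""
    return f"{kind}_{feature_id}"


print(tracked_topic("face"))                  # /humans/faces/tracked
print(feature_topic("face", "abcde", "roi"))  # /humans/faces/abcde/roi
print(feature_frame("face", "abcde"))         # face_abcde
```

Keeping every ID under a shared per-kind namespace is what lets generic tools discover all tracked humans from a single `tracked` topic.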

Common ROS packages

hri_msgs: base ROS messages for Human-Robot Interaction

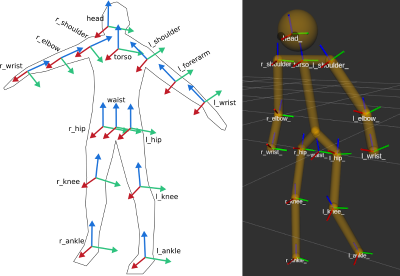

human_description: a parametric kinematic model of a human, in URDF format

libhri: a C++ library for easy access to human-related topics

hri_rviz: a collection of RViz plugins to visualise faces, facial landmarks, 3D kinematic models...
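libhri hides the bookkeeping of which humans are currently tracked. The sketch below illustrates that bookkeeping in plain Python, with no ROS dependency: it diffs successive lists of tracked IDs (assumed here to come from the `ids` field of an `hri_msgs/IdsList` message on a `tracked` topic) and fires callbacks for new and lost IDs. The `TrackedFeatures` class and its `on_new`/`on_lost` hooks are hypothetical and do not reproduce libhri's actual C++ API.

```python
from typing import Callable, Iterable, List, Set


class TrackedFeatures:
    """Hypothetical tracker of currently visible feature IDs (faces, bodies...).

    Feed `update` with each new list of tracked IDs; callbacks registered in
    `on_new` / `on_lost` fire once per ID that appears / disappears.
    """

    def __init__(self) -> None:
        self.ids: Set[str] = set()
        self.on_new: List[Callable[[str], None]] = []
        self.on_lost: List[Callable[[str], None]] = []

    def update(self, ids: Iterable[str]) -> None:
        current = set(ids)
        # Sorted iteration keeps the callback order deterministic.
        for new_id in sorted(current - self.ids):
            for callback in self.on_new:
                callback(new_id)
        for lost_id in sorted(self.ids - current):
            for callback in self.on_lost:
                callback(lost_id)
        self.ids = current


events = []
faces = TrackedFeatures()
faces.on_new.append(lambda i: events.append(("new", i)))
faces.on_lost.append(lambda i: events.append(("lost", i)))
faces.update(["abcde", "fghij"])
faces.update(["fghij", "klmno"])
print(events)
# [('new', 'abcde'), ('new', 'fghij'), ('new', 'klmno'), ('lost', 'abcde')]
```

In a real node, `update` would be called from the subscriber callback of the tracked-IDs topic.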

Specialized ROS packages

Feel free to add your own packages to this list, as long as they implement REP-155.

Face detection, recognition, analysis

hri_face_detect: a Google MediaPipe-based multi-person face detector.

- Supports:
  - facial landmarks
  - 3D head pose estimation
- Runs at 30+ FPS on CPU only

Body tracking, gesture recognition

hri_fullbody: a Google MediaPipe-based 3D full-body pose estimator

- Supports:
  - 2D and 3D pose estimation of a single person (multi-person pose estimation is possible with an external body detector)
  - facial landmarks
  - optional use of registered depth information to improve 3D pose estimation
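How hri_fullbody exploits depth is not detailed on this page. As an illustration, the sketch below shows the standard pinhole back-projection one could use to lift a 2D keypoint to 3D given a depth image registered to the RGB camera. It assumes depth in metres and intrinsics in the row-major `K` layout of `sensor_msgs/CameraInfo`; the `keypoint_to_3d` function and its median-window heuristic are hypothetical, not hri_fullbody's code.

```python
import math
import statistics
from typing import List, Optional, Sequence, Tuple


def keypoint_to_3d(u: int, v: int, depth: List[List[float]],
                   K: Sequence[float],
                   window: int = 1) -> Optional[Tuple[float, float, float]]:
    """Back-project pixel (u, v) to a 3D point in the camera optical frame.

    depth:  registered depth image in metres, indexed depth[row][col].
    K:      row-major 3x3 intrinsics [fx, 0, cx, 0, fy, cy, 0, 0, 1].
    window: half-size of the neighbourhood whose median depth is used,
            making the estimate robust to holes and noise at body edges.
    """
    fx, cx, fy, cy = K[0], K[2], K[4], K[5]
    samples: List[float] = []
    for row in range(v - window, v + window + 1):
        for col in range(u - window, u + window + 1):
            if 0 <= row < len(depth) and 0 <= col < len(depth[row]):
                z = depth[row][col]
                # Skip missing readings (0 and NaN are common "no data" values).
                if math.isfinite(z) and z > 0:
                    samples.append(z)
    if not samples:
        return None
    z = statistics.median(samples)
    # Pinhole model: x right, y down, z forward (optical frame convention).
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)


K = [500.0, 0.0, 2.0, 0.0, 500.0, 2.0, 0.0, 0.0, 1.0]  # toy 5x5 camera
depth = [[2.0] * 5 for _ in range(5)]
depth[2][4] = float("nan")  # missing reading at the keypoint itself
print(keypoint_to_3d(4, 2, depth, K))  # (0.008, 0.0, 2.0)
```

Taking the median of a small neighbourhood, rather than the single pixel under the keypoint, avoids losing the joint whenever the depth sensor returns no data exactly there.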

Voice processing, speech, dialogue understanding

Whole person analysis

hri_person_manager: probabilistic fusion of faces, bodies, voices into unified persons.
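hri_person_manager's probabilistic model is not described on this page. To convey the idea of fusing features into persons, the sketch below swaps in a much simpler, deterministic rule: union-find over candidate matches above a confidence threshold, applied greedily from most to least confident, refusing any merge that would give one person two features of the same kind. The `kind:id` string format, the `fuse` function and the 0.5 threshold are all hypothetical.

```python
from typing import Dict, List, Set, Tuple

# (feature_a, feature_b, confidence that both belong to the same person)
Match = Tuple[str, str, float]


def fuse(features: List[str], matches: List[Match],
         threshold: float = 0.5) -> List[Set[str]]:
    """Group features named "<kind>:<id>" (e.g. "face:f1") into persons.

    Toy, deterministic stand-in for probabilistic fusion (hypothetical).
    """
    parent = {f: f for f in features}

    def find(f: str) -> str:
        # Union-find root lookup with path halving.
        while parent[f] != f:
            parent[f] = parent[parent[f]]
            f = parent[f]
        return f

    def kinds(root: str) -> Set[str]:
        # Feature kinds already assigned to the person rooted at `root`.
        return {f.split(":")[0] for f in features if find(f) == root}

    # Most confident matches first; stop once below the threshold.
    for a, b, conf in sorted(matches, key=lambda m: m[2], reverse=True):
        if conf < threshold:
            break
        ra, rb = find(a), find(b)
        # Skip if already merged, or if a person would get e.g. two faces.
        if ra == rb or kinds(ra) & kinds(rb):
            continue
        parent[rb] = ra

    persons: Dict[str, Set[str]] = {}
    for f in features:
        persons.setdefault(find(f), set()).add(f)
    return sorted(persons.values(), key=sorted)


features = ["face:f1", "face:f2", "body:b1", "voice:v1"]
matches = [
    ("face:f1", "body:b1", 0.9),
    ("face:f2", "body:b1", 0.8),  # rejected: body:b1's person already has a face
    ("body:b1", "voice:v1", 0.6),
    ("face:f2", "voice:v1", 0.3),  # below threshold
]
persons = fuse(features, matches)
print([sorted(p) for p in persons])
# [['body:b1', 'face:f1', 'voice:v1'], ['face:f2']]
```

A probabilistic approach would instead keep and update association likelihoods over time, rather than committing greedily to a single grouping.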

Group interactions, gaze behaviour

Tutorials

- Getting Started with ROS4HRI

Covers the basic concepts of ROS4HRI and builds a simple human perception pipeline with face and body detection.