Only released in EOL distros.

Package Summary

nao_openni

- Author: Bener SUAY

- License: BSD

- Source: git https://github.com/WPI-RAIL/nao_rail.git (branch: fuerte-devel)


Motivation

- This package is the first package of the wpi_nao stack. More packages for human-robot interaction and robot-oriented machine learning applications will hopefully be available soon.

The main purpose is to control humanoid robots (the Aldebaran Nao in this case) as naturally as possible, using arm and leg gestures and without wearing any kind of device. The second purpose is to make teaching new tasks to robots easier, even for people with no robotics knowledge. The ultimate aim is to contribute to the Learning from Demonstration field.

Functionality

- This package aims to be more general than just controlling Nao with a Microsoft Kinect. It queries a depth camera (the Microsoft Kinect in this case), interprets the user's gestures (angles between limbs), and publishes messages to the robot control node (nao_ctrl in this case).
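To make "angles between limbs" concrete, here is a minimal Python sketch (not code taken from teleop_nao_ni.cpp) that computes the angle at a joint, such as the elbow, from three tracked joint positions. It assumes the tracker delivers each joint as an (x, y, z) position in meters; the function name limb_angle is hypothetical.

```python
import math
from typing import Sequence

Vec3 = Sequence[float]


def limb_angle(a: Vec3, joint: Vec3, b: Vec3) -> float:
    """Angle in degrees at `joint` between the segments joint->a and joint->b."""
    u = [ai - ji for ai, ji in zip(a, joint)]
    v = [bi - ji for bi, ji in zip(b, joint)]
    dot = sum(ui * vi for ui, vi in zip(u, v))
    norm = math.hypot(*u) * math.hypot(*v)
    if norm == 0.0:
        raise ValueError("degenerate limb: two joints coincide")
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


# Example: shoulder, elbow and hand positions (meters) forming a right angle.
shoulder = (0.0, 0.3, 2.0)
elbow = (0.0, 0.0, 2.0)
hand = (0.3, 0.0, 2.0)
print(round(limb_angle(shoulder, elbow, hand), 1))  # 90.0
```

The dot-product form works the same for any joint (elbow, knee, shoulder), so one helper can cover every limb angle a gesture interpreter needs.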

How To Control the Robot

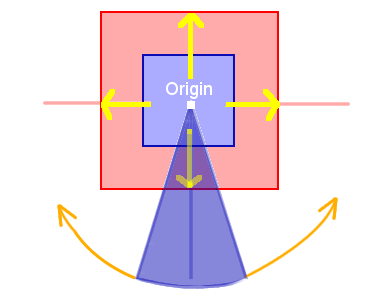

(Left) The location where the user turns the motors ON becomes the origin of the navigation controller. The blue zone shown in the figure is the safe zone, where the user's small movements won't affect the robot. When the user steps into the red zone, the robot steps in the +/-X or +/-Y direction accordingly. The initial pose of the user's shoulders becomes the zero for the robot's angular motion. Again, the blue angular zone shown in the figure is safe, but rotating into the region between the blue lines and the red lines makes the robot rotate around the Z axis.
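The safe-zone behavior described above is essentially a dead-zone controller. The sketch below is a hypothetical illustration, not the logic in teleop_nao_ni.cpp: it assumes the user's torso position is already expressed in a ground frame with x forward and y to the left, that yaw increases counterclockwise, that the safe zone is square, and it uses made-up thresholds (SAFE_HALF_WIDTH_M, SAFE_YAW_DEG). The names walk_command and WalkCommand are also hypothetical.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen for illustration only.
SAFE_HALF_WIDTH_M = 0.15  # half-width of the square blue (safe) zone, meters
SAFE_YAW_DEG = 15.0       # half-angle of the blue angular safe zone, degrees


@dataclass
class WalkCommand:
    step_x: int  # -1 = step backward, 0 = stay, +1 = step forward
    step_y: int  # -1 = step right, 0 = stay, +1 = step left
    turn: int    # -1 = clockwise, 0 = stay, +1 = counterclockwise about Z


def dead_zone(value: float, threshold: float) -> int:
    """Return 0 inside the safe band, otherwise the sign of value."""
    if abs(value) <= threshold:
        return 0
    return 1 if value > 0 else -1


def walk_command(torso_xy, origin_xy, yaw_deg, yaw0_deg) -> WalkCommand:
    """Map the user's displacement and shoulder yaw to a discrete step command.

    origin_xy and yaw0_deg are the torso position and shoulder yaw recorded
    when the user turned the motors on (assumed to be captured elsewhere).
    """
    dx = torso_xy[0] - origin_xy[0]
    dy = torso_xy[1] - origin_xy[1]
    # Wrap the yaw difference into [-180, 180) so 350 vs 0 reads as -10.
    dyaw = (yaw_deg - yaw0_deg + 180.0) % 360.0 - 180.0
    return WalkCommand(
        step_x=dead_zone(dx, SAFE_HALF_WIDTH_M),
        step_y=dead_zone(dy, SAFE_HALF_WIDTH_M),
        turn=dead_zone(dyaw, SAFE_YAW_DEG),
    )


print(walk_command((0.3, -0.05), (0.0, 0.0), 20.0, 0.0))
# WalkCommand(step_x=1, step_y=0, turn=1)
```

In this example the user is past the forward threshold and has turned past the angular safe zone, while the small sideways offset stays inside the blue zone and is ignored. Wrapping the yaw difference keeps a small turn across the 0/360 boundary from being read as a large rotation.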

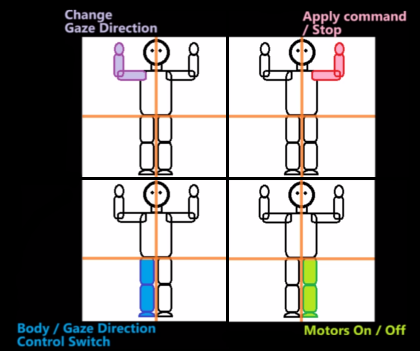

(Right) The robot has two modes: Body Control mode and Gaze Direction Control mode. The user can switch between these modes using his/her right leg. In Body Control mode, the motions shown in the left figure make Nao navigate. In Gaze Direction Control mode, Nao pays attention to the user's right arm and rotates its head accordingly. The user can then use his/her left arm to give an additional command. For now, Nao rotates and walks towards the direction it is looking at, but I hope to add more commands with the help of the community.
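The mode switch can be thought of as a tiny state machine. The Python sketch below is hypothetical (ModeSwitch and gaze_angles_deg are illustrative names, not part of nao_openni): it toggles between the two modes on the rising edge of a "right leg raised" signal, whose detection is assumed to happen elsewhere, and computes the yaw and elevation of the user's right arm, which could drive the head in Gaze Direction Control mode. It assumes joint positions in a frame with x forward, y to the left, and z up; converting these angles to Nao's head joint conventions is not shown.

```python
import math
from enum import Enum


class Mode(Enum):
    BODY_CONTROL = "body"
    GAZE_CONTROL = "gaze"


class ModeSwitch:
    """Toggle the control mode once per right-leg raise (rising edge)."""

    def __init__(self) -> None:
        self.mode = Mode.BODY_CONTROL
        self._leg_was_raised = False

    def update(self, right_leg_raised: bool) -> Mode:
        # Toggle only on the transition lowered -> raised, so holding the leg
        # up for many frames does not flip the mode back and forth.
        if right_leg_raised and not self._leg_was_raised:
            self.mode = (Mode.GAZE_CONTROL if self.mode is Mode.BODY_CONTROL
                         else Mode.BODY_CONTROL)
        self._leg_was_raised = right_leg_raised
        return self.mode


def gaze_angles_deg(shoulder, hand):
    """Yaw and elevation (degrees) of the shoulder->hand pointing vector.

    Assumes points are given in a frame with x forward, y left, z up.
    """
    x, y, z = (h - s for h, s in zip(hand, shoulder))
    yaw = math.degrees(math.atan2(y, x))
    elevation = math.degrees(math.atan2(z, math.hypot(x, y)))
    return yaw, elevation
```

Feeding the per-frame leg signal [False, True, True, False, True] into update() yields BODY_CONTROL, GAZE_CONTROL, GAZE_CONTROL, GAZE_CONTROL, BODY_CONTROL: holding the leg up keeps the current mode instead of flipping it on every frame.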

Video

To appear at HRI2011 Videos Session

Inside the Package

nao_openni/src/teleop_nao_ni.cpp is the main (and, for now, the only) source file. It is heavily commented, but there is still plenty of room for more comments. I would be super happy if you read it, find mistakes, develop the code further, or help me work through the To Do list.

nao_openni/nao_ni_walker.py is the Python script that controls Nao's motion and speech. It should be copied into the folder PATH_TO_YOUR_ROS_STACKS/FREIBURGS_NAO_PACKAGE/nao_ctrl/scripts. Here, FREIBURGS_NAO_PACKAGE is the package developed by Armin Hornung at the University of Freiburg (see http://wiki/nao).

How to Run the Code

- If you don't have them already, install the packages listed in the Dependencies section, along with their own dependencies, using:

rosdep install PACKAGE_NAME

- Copy nao_openni/nao_ni_walker.py to nao_ctrl/scripts/.

IMPORTANT NOTE: For now, please use version 0.2 of the nao stack. I will update my package for the newest version of the nao stack at some point.

- Launch the Microsoft Kinect nodes:

roslaunch openni_camera openni_kinect.launch

- If you'd like to see yourself being tracked, which is useful while controlling the robot, run:

rosrun openni Sample-NiUserTracker

- Turn on Nao, make sure that it's connected to the network, check its IP address, and start the walker:

roscd nao_ctrl/scripts
./nao_ni_walker.py --pip="YOUR_NAOS_IP_HERE" --pport=9559

- Build and run nao_ni:

rosmake nao_openni
rosrun nao_openni teleop_nao_ni

- Stand in the standard Psi pose and wait for the nao_ni code to print "Calibration complete, start tracking user".

- For a short tutorial and to get familiar with the commands, see the video.

IMPORTANT NOTE: Calibration in nao_ni currently takes much longer than in Sample-NiUserTracker, because the message publishing rate for Nao is relatively low. Don't get confused if you're watching yourself in "Sample-NiUserTracker": wait until you see the "Calibration complete" message in the terminal where you're actually running "nao_ni". The plan is to make calibration independent of the publishing rate, and thus faster, in the next release.

More information

See http://wiki/nao for more information about nao_ctrl and nao_remote (special thanks to Armin Hornung).

See http://wiki/ni for more information about openni (thanks to everyone who contributed to OpenNI and the Microsoft Kinect imports).

Dependencies

The gesture interpretation code is written in C++, and the following ROS packages are required:

- nao. IMPORTANT NOTE: For now, please use version 0.2 of the nao stack. I will update my package for the newest version of the nao stack at some point. If you happen to update it yourself, please let me know; I would be happy to use your update.

- openni. IMPORTANT NOTE: This package is deprecated too, although several people have told me that nao_openni works OK with the new stack.